find_clusters¶

-

ampligraph.discovery.find_clusters(X, model, clustering_algorithm=DBSCAN(algorithm='auto', eps=0.5, leaf_size=30, metric='euclidean', metric_params=None, min_samples=5, n_jobs=None, p=None), mode='entity')¶ Perform link-based cluster analysis on a knowledge graph.

The clustering happens on the embedding space of the entities and relations. For example, if we cluster some entities of a model that uses k=100 (i.e. embedding space of size 100), we will apply the chosen clustering algorithm on the 100-dimensional space of the provided input samples.

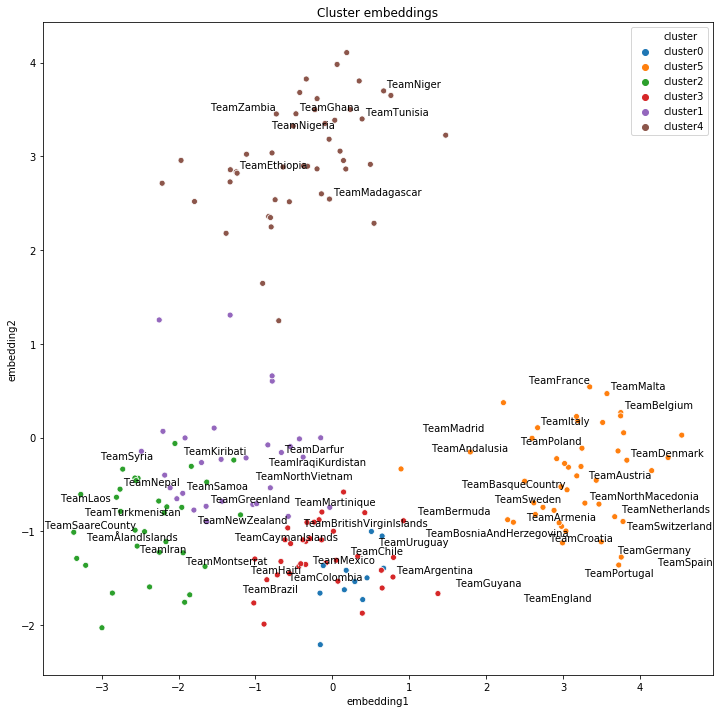

Clustering can be used to evaluate the quality of the knowledge embeddings, by comparing to natural clusters. For example, in the example below we cluster the embeddings of international football matches and end up finding geographical clusters very similar to the continents. This comparison can be subjective by inspecting a 2D projection of the embedding space or objective using a clustering metric.

The choice of the clustering algorithm and its corresponding tuning will greatly impact the results. Please see scikit-learn documentation for a list of algorithms, their parameters, and pros and cons.Clustering is exclusive (i.e. a triple is assigned to one and only one cluster).

- Parameters

X (ndarray, shape [n, 3] or [n]) – The input to be clustered.

Xcan either be the triples of a knowledge graph, its entities, or its relations. The argumentmodedefines whetherXis supposed an array of triples or an array of either entities or relations.model (EmbeddingModel) – The fitted model that will be used to generate the embeddings. This model must have been fully trained already, be it directly with

fit()or from a helper function such asampligraph.evaluation.select_best_model_ranking().clustering_algorithm (object) –

The initialized object of the clustering algorithm. It should be ready to apply the fit_predict method. Please see: scikit-learn documentation to understand the clustering API provided by scikit-learn. The default clustering model is sklearn’s DBSCAN with its default parameters.

mode (string) –

Clustering mode. Choose from:

- ’entity’ (default): the algorithm will cluster the embeddings of the provided entities.

- ’relation’: the algorithm will cluster the embeddings of the provided relations.

- ’triple’ : the algorithm will cluster the concatenation of the embeddings of the subject, predicate and object for each triple.

- Returns

labels – Index of the cluster each triple belongs to.

- Return type

ndarray, shape [n]

Examples

>>> # Note seaborn, matplotlib, adjustText are not AmpliGraph dependencies. >>> # and must therefore be installed manually as: >>> # >>> # $ pip install seaborn matplotlib adjustText >>> >>> import requests >>> import pandas as pd >>> import numpy as np >>> from sklearn.decomposition import PCA >>> from sklearn.cluster import KMeans >>> import matplotlib.pyplot as plt >>> import seaborn as sns >>> >>> # adjustText lib: https://github.com/Phlya/adjustText >>> from adjustText import adjust_text >>> >>> from ampligraph.datasets import load_from_csv >>> from ampligraph.latent_features import ComplEx >>> from ampligraph.discovery import find_clusters >>> >>> # International football matches triples >>> # See tutorial here to understand how the triples are created from a tabular dataset: >>> # https://github.com/Accenture/AmpliGraph/blob/master/docs/tutorials/ClusteringAndClassificationWithEmbeddings.ipynb >>> url = 'https://ampligraph.s3-eu-west-1.amazonaws.com/datasets/football.csv' >>> open('football.csv', 'wb').write(requests.get(url).content) >>> X = load_from_csv('.', 'football.csv', sep=',')[:, 1:] >>> >>> model = ComplEx(batches_count=50, >>> epochs=300, >>> k=100, >>> eta=20, >>> optimizer='adam', >>> optimizer_params={'lr':1e-4}, >>> loss='multiclass_nll', >>> regularizer='LP', >>> regularizer_params={'p':3, 'lambda':1e-5}, >>> seed=0, >>> verbose=True) >>> model.fit(X) >>> >>> df = pd.DataFrame(X, columns=["s", "p", "o"]) >>> >>> teams = np.unique(np.concatenate((df.s[df.s.str.startswith("Team")], >>> df.o[df.o.str.startswith("Team")]))) >>> team_embeddings = model.get_embeddings(teams, embedding_type='entity') >>> >>> embeddings_2d = PCA(n_components=2).fit_transform(np.array([i for i in team_embeddings])) >>> >>> # Find clusters of embeddings using KMeans >>> kmeans = KMeans(n_clusters=6, n_init=100, max_iter=500) >>> clusters = find_clusters(teams, model, kmeans, mode='entity') >>> >>> # Plot results >>> df = pd.DataFrame({"teams": teams, "clusters": "cluster" + pd.Series(clusters).astype(str), >>> "embedding1": embeddings_2d[:, 0], "embedding2": embeddings_2d[:, 1]}) >>> >>> plt.figure(figsize=(10, 10)) >>> plt.title("Cluster embeddings") >>> >>> ax = sns.scatterplot(data=df, x="embedding1", y="embedding2", hue="clusters") >>> >>> texts = [] >>> for i, point in df.iterrows(): >>> if np.random.uniform() < 0.1: >>> texts.append(plt.text(point['embedding1']+.02, point['embedding2'], str(point['teams']))) >>> adjust_text(texts)