NOTE: An interactive version of this tutorial is available on Colab.

Download the Jupyter notebook

Clustering and Classification using Knowledge Graph Embeddings¶

In this tutorial we will explore how to use the knowledge embeddings generated by a graph of international football matches (since the 19th century) in clustering and classification tasks. Knowledge graph embeddings are typically used for missing link prediction and knowledge discovery, but they can also be used for entity clustering, entity disambiguation, and other downstream tasks. The embeddings are a form of representation learning that allow linear algebra and machine learning to be applied to knowledge graphs, which otherwise would be difficult to do.

We will cover in this tutorial:

- Creating the knowledge graph (i.e. triples) from a tabular dataset of football matches

- Training the ComplEx embedding model on those triples

- Evaluating the quality of the embeddings on a validation set

- Clustering the embeddings, comparing to the natural clusters formed by the geographical continents

- Applying the embeddings as features in classification task, to predict match results

- Evaluating the predictive model on a out-of-time test set, comparing to a simple baseline

We will show that knowledge embedding clusters manage to capture implicit geographical information from the graph and that they can be a useful feature source for a downstream machine learning classification task, significantly increasing accuracy from the baseline.

Requirements¶

A Python environment with the AmpliGraph library installed. Please follow the install guide.

Some sanity check:

import numpy as np

import pandas as pd

import ampligraph

ampligraph.__version__

'1.1-dev'

Dataset¶

We will use the International football results from 1872 to 2019 available on Kaggle (public domain). It contains over 40 thousand international football matches. Each row contains the following information:

- Match date

- Home team name

- Away team name

- Home score (goals including extra time)

- Away score (goals including extra time)

- Tournament (whether it was a friendly match or part of a tournament)

- City where match took place

- Country where match took place

- Whether match was on neutral grounds

This dataset comes in a tabular format, therefore we will need to construct the knowledge graph ourselves.

import requests

url = 'https://ampligraph.s3-eu-west-1.amazonaws.com/datasets/football_graph.csv'

open('football_results.csv', 'wb').write(requests.get(url).content)

3033782

df = pd.read_csv("football_results.csv").sort_values("date")

df.isna().sum()

date 0

home_team 0

away_team 0

home_score 2

away_score 2

tournament 0

city 0

country 0

neutral 0

dtype: int64

Dropping matches with unknown score:

df = df.dropna()

The training set will be from 1872 to 2014, while the test set will be from 2014 to present date. Note that a temporal test set makes any machine learning task harder compared to a random shuffle.

df["train"] = df.date < "2014-01-01"

df.train.value_counts()

True 35714

False 5057

Name: train, dtype: int64

Knowledge graph creation¶

We are going to create a knowledge graph from scratch based on the match information. The idea is that each match is an entity that will be connected to its participating teams, geography, characteristics, and results.

The objective is to generate a new representation of the dataset where each data point is an triple in the form:

<subject, predicate, object>

First we need to create the entities (subjects and objects) that will form the graph. We make sure teams and geographical information result in different entities (e.g. the Brazilian team and the corresponding country will be different).

# Entities naming

df["match_id"] = df.index.values.astype(str)

df["match_id"] = "Match" + df.match_id

df["city_id"] = "City" + df.city.str.title().str.replace(" ", "")

df["country_id"] = "Country" + df.country.str.title().str.replace(" ", "")

df["home_team_id"] = "Team" + df.home_team.str.title().str.replace(" ", "")

df["away_team_id"] = "Team" + df.away_team.str.title().str.replace(" ", "")

df["tournament_id"] = "Tournament" + df.tournament.str.title().str.replace(" ", "")

df["neutral"] = df.neutral.astype(str)

Then, we create the actual triples based on the relationship between the entities. We do it only for the triples in the training set (before 2014).

triples = []

for _, row in df[df["train"]].iterrows():

# Home and away information

home_team = (row["home_team_id"], "isHomeTeamIn", row["match_id"])

away_team = (row["away_team_id"], "isAwayTeamIn", row["match_id"])

# Match results

if row["home_score"] > row["away_score"]:

score_home = (row["home_team_id"], "winnerOf", row["match_id"])

score_away = (row["away_team_id"], "loserOf", row["match_id"])

elif row["home_score"] < row["away_score"]:

score_away = (row["away_team_id"], "winnerOf", row["match_id"])

score_home = (row["home_team_id"], "loserOf", row["match_id"])

else:

score_home = (row["home_team_id"], "draws", row["match_id"])

score_away = (row["away_team_id"], "draws", row["match_id"])

home_score = (row["match_id"], "homeScores", np.clip(int(row["home_score"]), 0, 5))

away_score = (row["match_id"], "awayScores", np.clip(int(row["away_score"]), 0, 5))

# Match characteristics

tournament = (row["match_id"], "inTournament", row["tournament_id"])

city = (row["match_id"], "inCity", row["city_id"])

country = (row["match_id"], "inCountry", row["country_id"])

neutral = (row["match_id"], "isNeutral", row["neutral"])

year = (row["match_id"], "atYear", row["date"][:4])

triples.extend((home_team, away_team, score_home, score_away,

tournament, city, country, neutral, year, home_score, away_score))

Note that we treat some literals (year, neutral match, home score, away score) as discrete entities and they will be part of the final knowledge graph used to generate the embeddings. We limit the number of score entities by clipping the score to be at most 5.

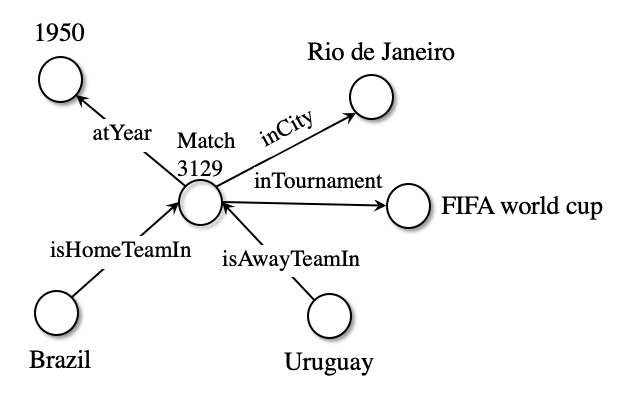

Below we can see visualise a subset of the graph related to the infamous Maracanazo:

The whole graph related to this match can be summarised by the triples below:

triples_df = pd.DataFrame(triples, columns=["subject", "predicate", "object"])

triples_df[(triples_df.subject=="Match3129") | (triples_df.object=="Match3129")]

| subject | predicate | object | |

|---|---|---|---|

| 34419 | TeamBrazil | isHomeTeamIn | Match3129 |

| 34420 | TeamUruguay | isAwayTeamIn | Match3129 |

| 34421 | TeamBrazil | loserOf | Match3129 |

| 34422 | TeamUruguay | winnerOf | Match3129 |

| 34423 | Match3129 | inTournament | TournamentFifaWorldCup |

| 34424 | Match3129 | inCity | CityRioDeJaneiro |

| 34425 | Match3129 | inCountry | CountryBrazil |

| 34426 | Match3129 | isNeutral | False |

| 34427 | Match3129 | atYear | 1950 |

| 34428 | Match3129 | homeScores | 1 |

| 34429 | Match3129 | awayScores | 2 |

Training knowledge graph embeddings¶

We split our training dataset further into training and validation, where the new training set will be used to the knowledge embedding training and the validation set will be used in its evaluation. The test set will be used to evaluate the performance of the classification algorithm built on top of the embeddings.

What differs from the standard method of randomly sampling N points to make up our validation set is that our data points are two entities linked by some relationship, and we need to take care to ensure that all entities are represented in train and validation sets by at least one triple.

To accomplish this, AmpliGraph provides the train_test_split_no_unseen function.

from ampligraph.evaluation import train_test_split_no_unseen

X_train, X_valid = train_test_split_no_unseen(np.array(triples), test_size=10000)

print('Train set size: ', X_train.shape)

print('Test set size: ', X_valid.shape)

Train set size: (382854, 3)

Test set size: (10000, 3)

AmpliGraph has implemented several Knowledge Graph Embedding models (TransE, ComplEx, DistMult, HolE), but to begin with we’re just going to use the ComplEx model, which is known to bring state-of-the-art predictive power.

The hyper-parameter choice was based on the best results we have found so far for the ComplEx model applied to some benchmark datasets used in the knowledge graph embeddings community. This tutorial does not cover hyper-parameter tuning.

from ampligraph.latent_features import ComplEx

model = ComplEx(batches_count=50,

epochs=300,

k=100,

eta=20,

optimizer='adam',

optimizer_params={'lr':1e-4},

loss='multiclass_nll',

regularizer='LP',

regularizer_params={'p':3, 'lambda':1e-5},

seed=0,

verbose=True)

Lets go through the parameters to understand what’s going on:

batches_count: the number of batches in which the training set is split during the training loop. If you are having into low memory issues than settings this to a higher number may help.epochs: the number of epochs to train the model for.k: the dimensionality of the embedding space.eta($\eta$) : the number of negative, or false triples that must be generated at training runtime for each positive, or true triple.optimizer: the Adam optimizer, with a learning rate of 1e-4 set via the optimizer_params kwarg.loss: pairwise loss, with a margin of 0.5 set via the loss_params kwarg.regularizer: $L_p$ regularization with $p=3$, i.e. l3 regularization. $\lambda$ = 1e-5, set via the regularizer_params kwarg.seed: random seed, used for reproducibility.verbose- displays a progress bar.

Training should take around 10 minutes on a modern GPU:

import tensorflow as tf

tf.logging.set_verbosity(tf.logging.ERROR)

model.fit(X_train)

Average Loss: 0.400814: 100%|██████████| 300/300 [09:58<00:00, 2.01s/epoch]

Evaluating knowledge embeddings¶

AmpliGraph aims to follow scikit-learn’s ease-of-use design philosophy and simplify everything down to fit, evaluate, and predict functions.

However, there are some knowledge graph specific steps we must take to ensure our model can be trained and evaluated correctly. The first of these is defining the filter that will be used to ensure that no negative statements generated by the corruption procedure are actually positives. This is simply done by concatenating our train and test sets. Now when negative triples are generated by the corruption strategy, we can check that they aren’t actually true statements.

filter_triples = np.concatenate((X_train, X_valid))

For this we’ll use the evaluate_performance function:

X- the data to evaluate on. We’re going to use our test set to evaluate.model- the model we previously trained.filter_triples- will filter out the false negatives generated by the corruption strategy.use_default_protocol- specifies whether to use the default corruption protocol. If True, then subj and obj are corrupted separately during evaluation.verbose- displays a progress bar.

from ampligraph.evaluation import evaluate_performance

ranks = evaluate_performance(X_valid,

model=model,

filter_triples=filter_triples,

use_default_protocol=True,

verbose=True)

100%|██████████| 10000/10000 [02:09<00:00, 77.33it/s]

We’re going to use the mrr_score (mean reciprocal rank) and hits_at_n_score functions.

- mrr_score: The function computes the mean of the reciprocal of elements of a vector of rankings ranks.

- hits_at_n_score: The function computes how many elements of a vector of rankings ranks make it to the top n positions.

from ampligraph.evaluation import mr_score, mrr_score, hits_at_n_score

mr = mr_score(ranks)

mrr = mrr_score(ranks)

print("MRR: %.2f" % (mrr))

print("MR: %.2f" % (mr))

hits_10 = hits_at_n_score(ranks, n=10)

print("Hits@10: %.2f" % (hits_10))

hits_3 = hits_at_n_score(ranks, n=3)

print("Hits@3: %.2f" % (hits_3))

hits_1 = hits_at_n_score(ranks, n=1)

print("Hits@1: %.2f" % (hits_1))

MRR: 0.26

MR: 4365.06

Hits@10: 0.36

Hits@3: 0.29

Hits@1: 0.19

We can interpret these results by stating that the model will rank the correct entity within the top-3 possibilities 29% of the time.

By themselves, these metrics are not enough to conclude the usefulness of the embeddings in a downstream task, but they suggest that the embeddings have learned a reasonable representation enough to consider using them in more tasks.

Clustering and embedding visualization¶

To evaluate the subjective quality of the embeddings, we can visualise the embeddings on 2D space and also cluster them on the original space. We can compare the clustered embeddings with natural clusters, in this case the continent where the team is from, so that we have a ground truth to evaluate the clustering quality both qualitatively and quantitatively.

Requirements:

- seaborn

- adjustText

- incf.countryutils

For seaborn and adjustText, simply install them with pip install seaborn adjustText.

For incf.countryutils, do the following steps:

git clone https://github.com/wyldebeast-wunderliebe/incf.countryutils.git

cd incf.countryutils

pip install .```

```python

from sklearn.decomposition import PCA

import matplotlib.pyplot as plt

import seaborn as sns

from adjustText import adjust_text

from incf.countryutils import transformations

%matplotlib inline

We create a map from the team ID (e.g. “TeamBrazil”) to the team name (e.g. “Brazil”) for visualization purposes.

id_to_name_map = {**dict(zip(df.home_team_id, df.home_team)), **dict(zip(df.away_team_id, df.away_team))}

We now create a dictionary with the embeddings of all teams:

teams = pd.concat((df.home_team_id[df["train"]], df.away_team_id[df["train"]])).unique()

team_embeddings = dict(zip(teams, model.get_embeddings(teams)))

We use PCA to project the embeddings from the 200 space into 2D space:

embeddings_2d = PCA(n_components=2).fit_transform(np.array([i for i in team_embeddings.values()]))

We will cluster the teams embeddings on its original 200-dimensional space using the find_clusters in our discovery API:

from ampligraph.discovery import find_clusters

from sklearn.cluster import KMeans

clustering_algorithm = KMeans(n_clusters=6, n_init=50, max_iter=500, random_state=0)

clusters = find_clusters(teams, model, clustering_algorithm, mode='entity')

This helper function uses the incf.countryutils library to translate country names to their corresponding continents.

def cn_to_ctn(country):

try:

return transformations.cn_to_ctn(id_to_name_map[country])

except KeyError:

return "unk"

This dataframe contains for each team their projected embeddings to 2D space via PCA, their continent and the KMeans cluster. This will be used alongisde Seaborn to make the visualizations.

plot_df = pd.DataFrame({"teams": teams,

"embedding1": embeddings_2d[:, 0],

"embedding2": embeddings_2d[:, 1],

"continent": pd.Series(teams).apply(cn_to_ctn),

"cluster": "cluster" + pd.Series(clusters).astype(str)})

We plot the results on a 2D scatter plot, coloring the teams by the continent or cluster and also displaying some individual team names.

We always display the names of the top 20 teams (according to FIFA rankings) and a random subset of the rest.

top20teams = ["TeamBelgium", "TeamFrance", "TeamBrazil", "TeamEngland", "TeamPortugal", "TeamCroatia", "TeamSpain",

"TeamUruguay", "TeamSwitzerland", "TeamDenmark", "TeamArgentina", "TeamGermany", "TeamColombia",

"TeamItaly", "TeamNetherlands", "TeamChile", "TeamSweden", "TeamMexico", "TeamPoland", "TeamIran"]

def plot_clusters(hue):

np.random.seed(0)

plt.figure(figsize=(12, 12))

plt.title("{} embeddings".format(hue).capitalize())

ax = sns.scatterplot(data=plot_df[plot_df.continent!="unk"], x="embedding1", y="embedding2", hue=hue)

texts = []

for i, point in plot_df.iterrows():

if point["teams"] in top20teams or np.random.random() < 0.1:

texts.append(plt.text(point['embedding1']+0.02, point['embedding2']+0.01, str(point["teams"])))

adjust_text(texts)

The first visualisation of the 2D embeddings shows the natural geographical clusters (continents), which can be seen as a form of the ground truth:

plot_clusters("continent")

We can see above that the embeddings learned geographical similarities even though this information was not explicit on the original dataset.

Now we plot the same 2D embeddings but with the clusters found by K-Means:

plot_clusters("cluster")

We can see that K-Means found very similar cluster to the natural geographical clusters by the continents. This shows that on the 200-dimensional embedding space, similar teams appear close together, which can be captured by a clustering algorithm.

Our evaluation of the clusters can be more objective by using a metric such as the adjusted Rand score, which varies from -1 to 1, where 0 is random labelling and 1 is a perfect match:

from sklearn import metrics

metrics.adjusted_rand_score(plot_df.continent, plot_df.cluster)

0.39274828260196304

Classification¶

We will use the knowledge embeddings to predict future matches as a classification problem.

We can model it as a multiclass problem with three classes: home team wins, home team loses, draw.

The embeddings are used directly as features to a XGBoost classifier.

First we need to determine the target:

df["results"] = (df.home_score > df.away_score).astype(int) + \

(df.home_score == df.away_score).astype(int)*2 + \

(df.home_score < df.away_score).astype(int)*3 - 1

df.results.value_counts(normalize=True)

0 0.486473

2 0.282456

1 0.231071

Name: results, dtype: float64

Now we create a function that extracts the features (knowledge embeddings for home and away teams) and the target for a particular subset of the dataset:

def get_features_target(mask):

def get_embeddings(team):

return team_embeddings.get(team, np.full(200, np.nan))

X = np.hstack((np.vstack(df[mask].home_team_id.apply(get_embeddings).values),

np.vstack(df[mask].away_team_id.apply(get_embeddings).values)))

y = df[mask].results.values

return X, y

clf_X_train, y_train = get_features_target((df["train"]))

clf_X_test, y_test = get_features_target((~df["train"]))

clf_X_train.shape, clf_X_test.shape

((35714, 400), (5057, 400))

Note that we have 200 features by team because the ComplEx model uses imaginary and real number for its embeddings, so we have twice as many parameters as defined by k=100 in its model definition.

We also have some missing information from the embeddings of the entities (i.e. teams) that only appear in the test set, which are unlikely to be correctly classified:

np.isnan(clf_X_test).sum()/clf_X_test.shape[1]

105.0

First install xgboost with pip install xboost.

from xgboost import XGBClassifier

Create a multiclass model with 500 estimators:

clf_model = XGBClassifier(n_estimators=500, max_depth=5, objective="multi:softmax")

Fit the model using all of the training samples:

clf_model.fit(clf_X_train, y_train)

XGBClassifier(base_score=0.5, booster='gbtree', colsample_bylevel=1,

colsample_bytree=1, gamma=0, learning_rate=0.1, max_delta_step=0,

max_depth=5, min_child_weight=1, missing=None, n_estimators=500,

n_jobs=1, nthread=None, objective='multi:softprob',

random_state=0, reg_alpha=0, reg_lambda=1, scale_pos_weight=1,

seed=None, silent=True, subsample=1)

The baseline accuracy for this problem is 47%, as that is the frequency of the most frequent class (home team wins):

df[~df["train"]].results.value_counts(normalize=True)

0 0.471030

2 0.287325

1 0.241645

Name: results, dtype: float64

metrics.accuracy_score(y_test, clf_model.predict(clf_X_test))

0.5378683013644453

In conclusion, while the baseline for this classification problem was 47%, with just the knowledge embeddings alone we were able to build a classifier that achieves 54% accuracy.

As future work, we could add more features to the model (not embeddings related) and tune the model hyper-parameters.